What’s your name?

Hi, I am Sara Salimzadeh.

Citation

Sara Salimzadeh, Gaole He, and Ujwal Gadiraju. 2024. Dealing with Uncertainty: Understanding the Impact of Prognostic Versus Diagnostic Tasks on Trust and Reliance in Human-AI Decision-making. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’24), May 11–16, 2024, Honolulu, HI, USA. ACM, New York, NY, USA, 17 pages. https://doi.org/10.1145/3613904.3641905

Link to the paper: https://sara.salimzadeh.com/publications/CHI2024.pdf

If you had to describe this work in one sentence, what would you tell someone?

We increasingly collaborate with AI systems across our lives and professions to make informed decisions, and our research examines how task complexity and uncertainty affect the way we trust and rely on those systems.

What problem is this research addressing, and why does it matter?

Do we rely more on AI capabilities in highly complex and uncertain scenarios, or do we prefer to trust our own judgment? Previous studies have investigated how various human factors and AI abilities influence the effectiveness of collaboration between humans and AI in decision-making processes. However, there is limited understanding of how task-related factors influence users’ confidence and their reliance on AI systems. This understanding is essential for creating human-AI collaboration systems that can effectively support decision-making across domains according to users’ needs.

How did you approach this problem?

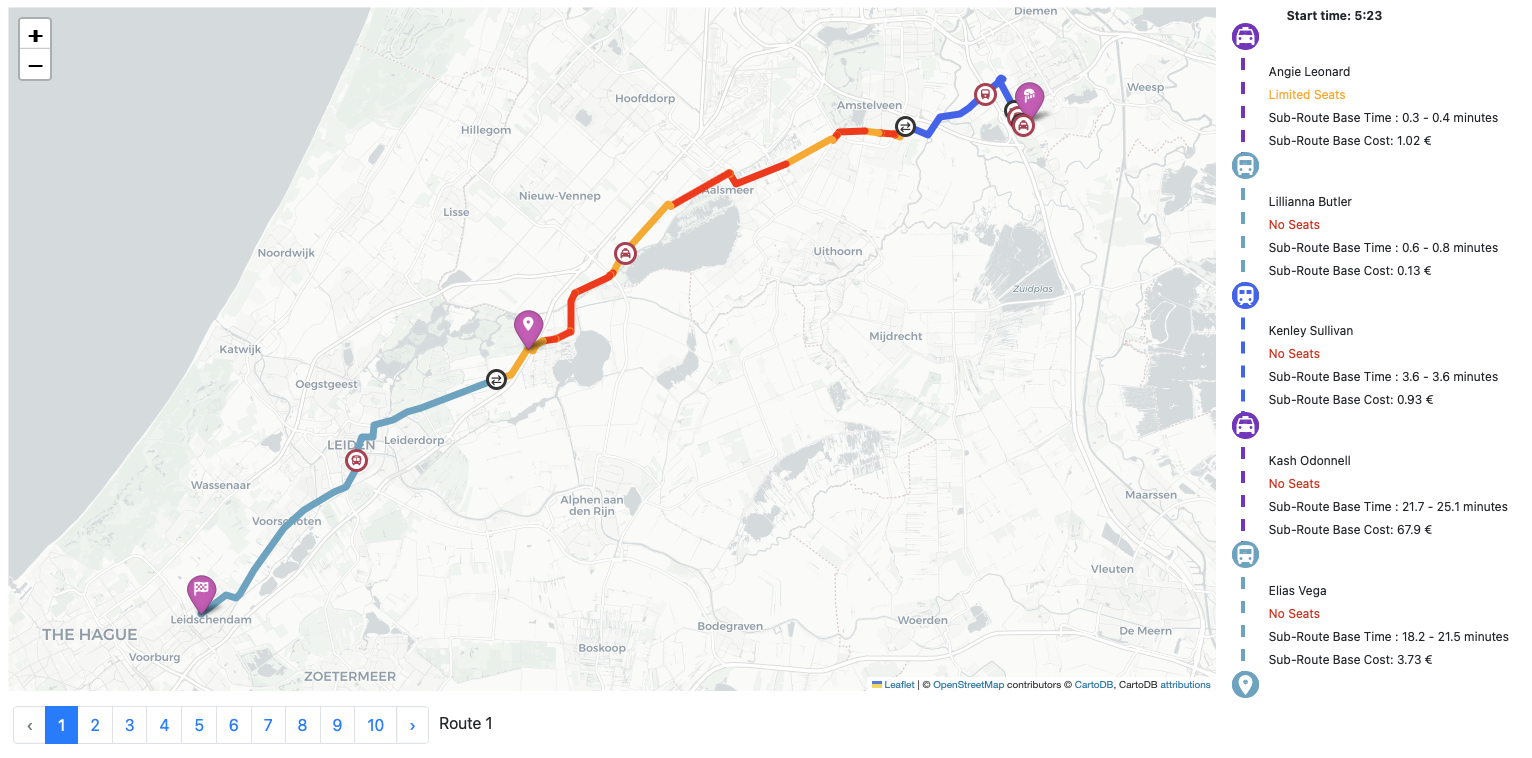

We designed and implemented a study in which participants made decisions in trip-planning scenarios that varied in complexity and uncertainty. We manipulated complexity at three relative levels (low, medium, and high) based on the number of factors to consider. We manipulated uncertainty at two levels, low (diagnostic) and high (prognostic), based on the source of information available. A total of 258 people participated in our experiment, each randomly assigned to one of the six experimental conditions. We chose the trip-planning scenario for its real-life relevance and because it let us manipulate complexity and uncertainty systematically. We then assessed participants’ trust in and reliance on the AI system by analyzing their decisions, their interactions with the system, and their questionnaire responses.
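
For readers who want to picture the design, here is a minimal sketch of a 3 × 2 between-subjects setup with 258 participants. It is illustrative only and not the code used in the study: the function names, the fixed seed, and the choice of equal-sized groups (43 per condition) are assumptions, since the description above says only that participants were randomly assigned to one of six conditions.

```python
import itertools
import random
from collections import Counter

# Factor levels as described in the interview.
COMPLEXITY_LEVELS = ["low", "medium", "high"]
UNCERTAINTY_LEVELS = ["diagnostic", "prognostic"]  # low vs. high uncertainty


def build_conditions():
    """Cross the two factors to get the six experimental conditions."""
    return list(itertools.product(COMPLEXITY_LEVELS, UNCERTAINTY_LEVELS))


def assign_participants(n_participants, seed=0):
    """Randomly assign participants to conditions.

    Assumption: groups are kept as equal in size as possible by cycling
    through the conditions and then shuffling. The study may have used a
    different randomization scheme.
    """
    rng = random.Random(seed)
    conditions = build_conditions()
    slots = [conditions[i % len(conditions)] for i in range(n_participants)]
    rng.shuffle(slots)
    return slots  # slots[i] is the condition for participant i


assignments = assign_participants(258)
group_sizes = Counter(assignments)
for condition, size in sorted(group_sizes.items()):
    print(condition, size)
```

Each participant sees only one combination of complexity and uncertainty, which is what makes comparisons across the six groups a between-subjects comparison.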

What were your key findings?

Our key findings indicate that participants relied more on AI systems in high-complexity scenarios and in situations with higher uncertainty (prognostic tasks). When faced with high complexity or uncertainty, people tend to lose confidence in their own judgment and are more inclined to follow AI systems unquestioningly. In contrast, their trust in AI remained relatively stable across all conditions, suggesting that trust is not the only factor influencing reliance on AI systems.

What is the main message you’d like people to take away?

Our work highlights the importance of considering the task context when designing AI systems and empirical studies to understand how humans interact with such systems. Our findings are a call to action for AI developers, researchers, and practitioners to develop methods and techniques that help users better understand and interpret AI system outputs, especially in highly complex and uncertain situations, to promote informed decision-making and prevent blind reliance on AI systems.

What led/inspired you to carry out this research?

The widespread use of ChatGPT and similar Large Language Models has led me to notice a growing dependence on AI systems among those around me. People rely on these systems for a wide range of tasks, from personal assistance to medical diagnosis, without fully acknowledging the potential risks and unknown factors involved. This motivated us to explore how people’s attitudes and actions could be influenced by the nature of their tasks, especially in terms of complexity and uncertainty. Do they modify their actions based on the specifics of the task requirements? Or do they blindly follow AI systems regardless of the task context? What if the AI system’s suggestions conflict with their own judgment?

What kind of skills/background would you say one needs to perform this type of research?

To perform this type of research, one would need a background in computer science, human-computer interaction, cognitive psychology, or a related field. Understanding quantitative research methods, statistical analysis, and experimental design is also crucial for conducting rigorous studies in this area. Additionally, knowledge of AI systems and their underlying technologies and an understanding of human decision-making processes and trust dynamics would be beneficial in designing and carrying out research in this field.

Any further reading you recommend?

Vivian Lai, Chacha Chen, Alison Smith-Renner, Q. Vera Liao, and Chenhao Tan. 2023. Towards a Science of Human-AI Decision Making: An Overview of Design Space in Empirical Human-Subject Studies. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’23). Association for Computing Machinery, New York, NY, USA, 1369–1385. https://doi.org/10.1145/3593013.3594087

Gaole He, Lucie Kuiper, and Ujwal Gadiraju. 2023. Knowing About Knowing: An Illusion of Human Competence Can Hinder Appropriate Reliance on AI Systems. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), April 23–28, 2023, Hamburg, Germany. ACM, New York, NY, USA, 18 pages. https://doi.org/10.1145/3544548.3581025

Alexander Erlei, Abhinav Sharma, and Ujwal Gadiraju. 2024. Understanding Choice Independence and Error Types in Human-AI Collaboration. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’24), May 11–16, 2024, Honolulu, HI, USA. ACM, New York, NY, USA, 19 pages. https://doi.org/10.1145/3613904.3641946

Your biography

I am a PhD Candidate in Computer Science at Delft University of Technology. My research focuses on identifying factors that influence human trust in and reliance on AI systems, particularly in decision-making contexts. I am passionate about designing techniques to promote informed decision-making and mitigate potential risks associated with AI technologies. I am actively involved in implementing user-centered interfaces and conducting user studies to understand human-AI interaction.

I am finishing up my PhD in July and I am currently seeking data scientist positions in industry where I can continue to explore the intersection of human decision-making and AI technologies. My previous engineering education has given me a strong foundation in programming and software system design, while my research experience has allowed me to delve deeper into the psychological and technical aspects of human-AI interaction.

Follow me on X: @SaraSalimzadeh

Website: https://sara.salimzadeh.com/